IDM Master’s Thesis (Spring ’24)

An interactive & immersive experience about AI imaginaries

My Role: Experience Designer & Creative Technologist

Duration: 3 months

Platform: Installation

Tools: TouchDesigner

Team: Solo

AI Through Your Lens

How has AI been perceived by humans?

Situated at the intersection of creative AI and human-AI interaction, this thesis examines people’s perspectives on AI and how AI-generated data influences those perspectives. Drawing on Jean-Paul Sartre’s philosophy of the imaginary, I investigate how images and imagination can shape individuals’ perceptions of AI.

“AI Through Your Lens” is an interactive experience of AI imaginaries that invites participants to explore their perceptions of AI through a humanistic lens. The project seeks to challenge conventional notions of AI and to prompt discussion of whether AI is confined to a uniform aesthetic or can be portrayed through a diverse range of representations.

Objective

To explore how individuals engage with and respond to AI data, in order to understand the mutual influence between human perception and AI.

What are AI imaginaries?

The term “AI imaginaries” in this thesis is inspired mainly by Benabdallah’s “A Notebook of Data Imaginaries,” which uses creative descriptions and visualizations to highlight the opacity of data collection and transformation processes.

Benabdallah described imaginaries as:

“A fertile terrain for designers, as they present a rich expanse of aesthetic possibilities and sensory information that can open up new vistas of intervention and interaction.”

Space

To provide a fully immersive experience, I envisioned a private room with dual projections of AI-generated data on the walls. The enclosed space allows for integrated sound and gives visitors the freedom to explore the art at their own pace.

Interaction

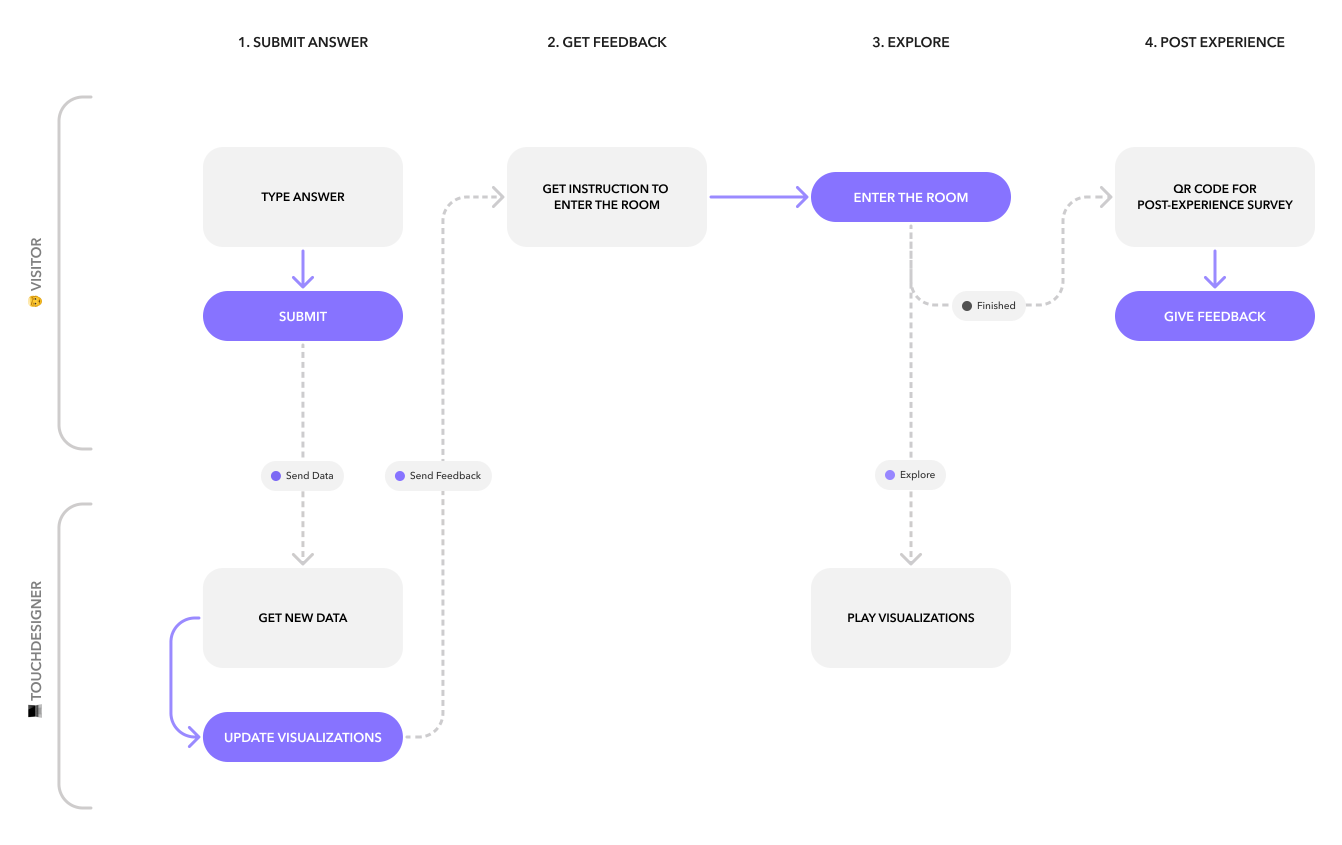

The experience is sequenced to capture each visitor’s own perspective on AI before they see anyone else’s answers. It therefore begins with an interactive segment in which visitors respond to a prompt on a tablet: “If AI can be anything in this world, what would AI look like?” Their responses are then transmitted to TouchDesigner via WebSockets, a protocol that provides full-duplex (simultaneous two-way) communication over a single connection.
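
As an illustration of what a single tablet response might look like on the wire, here is a minimal sketch in Python. The message shape (`type`, `text`, `timestamp`), the `MAX_LENGTH` cap, and the helper names are hypothetical, not the project’s actual schema. The idea is that each answer travels as one small JSON text frame, and the receiving side validates it before anything reaches the projection.

```python
import json
import time
from typing import Optional

MAX_LENGTH = 200  # assumed cap so one long answer cannot flood the display


def encode_response(text: str, timestamp: Optional[float] = None) -> str:
    """Serialize a visitor's answer as a JSON string for one WebSocket text frame."""
    cleaned = " ".join(text.split())  # collapse stray spaces and newlines
    if not cleaned:
        raise ValueError("empty response")
    payload = {
        "type": "response",  # hypothetical field names, not the project's schema
        "text": cleaned[:MAX_LENGTH],
        "timestamp": time.time() if timestamp is None else timestamp,
    }
    return json.dumps(payload)


def decode_response(message: str) -> Optional[str]:
    """Parse an incoming frame; return the answer text, or None if malformed."""
    try:
        payload = json.loads(message)
    except json.JSONDecodeError:
        return None
    if not isinstance(payload, dict) or payload.get("type") != "response":
        return None
    text = payload.get("text")
    return text if isinstance(text, str) and text else None
```

On the receiving side, the text-receive callback of a TouchDesigner WebSocket DAT (or any other WebSocket client) could pass each message through `decode_response` and ignore anything that returns `None`, so a malformed frame never reaches the visualization.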

Visual

The visual component includes text bubbles displaying visitors’ responses and AI-generated artworks derived from those responses. Together, they immerse the audience in the world of AI imaginaries and encourage reflection on their own perceptions.

Sound

I used MusicFX, a generative AI text-to-music tool, to create a 30-second sound clip. The prompt was “a sound that mimics machine,” and I experimented with different music genres, beats, tones, and BPM.

The Prototype

Interactive Data Visualization

To create an interactive data visualization, I used WebSockets to receive text data from a website hosted on Glitch (ai-imaginaries.glitch.me) and send it to TouchDesigner. The input is stored in JSON format and converted into a table in TouchDesigner. I used a Geo Text COMP to visualize each AI imaginary keyword, with its text size corresponding to how many times that keyword has been received. As soon as a visitor submits a response on the website, it appears on the screen.
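
The count-to-size mapping can be sketched in plain Python. This sketch assumes the stored JSON is a list of response strings, that keywords are matched case-insensitively after trimming whitespace, and that size scales linearly between a hypothetical `min_size` and `max_size`; the project’s actual normalization and scaling are not documented. The output mirrors a table with a header row, similar to what a Table DAT holds.

```python
import json
from collections import Counter


def keyword_rows(responses_json: str, min_size: float = 0.5,
                 max_size: float = 2.0) -> list[list[str]]:
    """Turn a JSON list of responses into [keyword, count, size] table rows."""
    header = ["keyword", "count", "size"]
    responses = json.loads(responses_json)
    # Normalize so "Robot" and " robot " count as the same keyword.
    counts = Counter(r.strip().lower() for r in responses if r.strip())
    if not counts:
        return [header]
    lo, hi = min(counts.values()), max(counts.values())
    rows = [header]
    # most_common() sorts by count; ties keep first-seen order.
    for keyword, count in counts.most_common():
        # Linear interpolation: rarest keyword -> min_size, most frequent -> max_size.
        t = 0.0 if hi == lo else (count - lo) / (hi - lo)
        size = min_size + t * (max_size - min_size)
        rows.append([keyword, str(count), f"{size:.2f}"])
    return rows
```

For example, three “robot” answers, two “ocean” answers, and one “cloud” answer would give sizes of 2.00, 1.25, and 0.50. The rows could then be copied into a Table DAT, with each Geo Text COMP instance reading its scale from the `size` column. A linear scale is the simplest choice; a square-root or logarithmic scale would keep one very popular keyword from dwarfing the rest.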

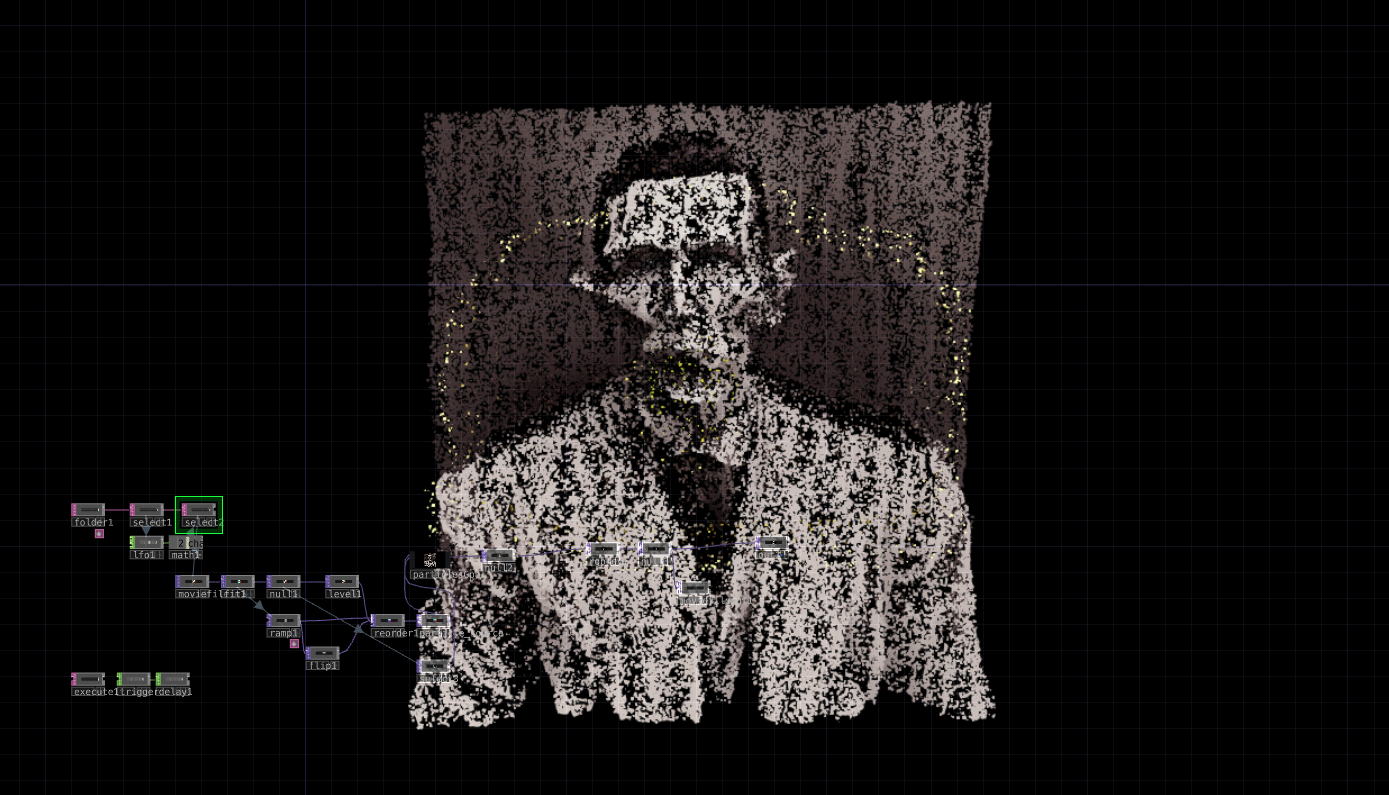

AI-Generated Data Visualization

To generate images in real time, I connected a Python script to the WebSocket stream. When a text input arrives, the script triggers Stable Diffusion, which generates an image from that input and saves it locally. TouchDesigner then reads from the directory containing these AI-generated images to drive the projection-based visualization.
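
The hand-off from a text input to an image file that TouchDesigner can pick up might be structured like the following sketch. The `render` callable stands in for the Stable Diffusion call; the filename scheme, the queue, and the function names are assumptions, not the project’s code. Two choices matter for a live installation. First, prompts are queued so slow generations run one at a time instead of piling up. Second, each image is written under a temporary name and then renamed, so a folder watcher never loads a half-written file.

```python
import os
import queue
import re
import threading
from pathlib import Path
from typing import Callable, Optional

# Hypothetical contract: render(prompt, path) writes exactly one image file at `path`.
Renderer = Callable[[str, Path], None]


def slugify(text: str, limit: int = 40) -> str:
    """Make a filesystem-safe name fragment from a visitor's prompt."""
    slug = re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    return slug[:limit].strip("-") or "untitled"


def generate_image(prompt: str, out_dir: Path, index: int, render: Renderer) -> Path:
    """Render one prompt into out_dir and publish the file atomically."""
    out_dir.mkdir(parents=True, exist_ok=True)
    final = out_dir / f"{index:04d}_{slugify(prompt)}.png"
    tmp = out_dir / (final.name + ".tmp")  # a watcher matching *.png ignores this
    render(prompt, tmp)
    os.replace(tmp, final)  # atomic rename within one filesystem
    return final


def start_worker(out_dir: Path, render: Renderer) -> "queue.Queue[Optional[str]]":
    """Start a background thread that renders queued prompts one at a time."""
    prompts: "queue.Queue[Optional[str]]" = queue.Queue()

    def run() -> None:
        index = 0
        while True:
            prompt = prompts.get()
            try:
                if prompt is None:  # sentinel value: stop the worker
                    return
                generate_image(prompt, out_dir, index, render)
                index += 1
            except Exception as exc:  # one failed prompt should not stop the installation
                print(f"generation failed for {prompt!r}: {exc}")
            finally:
                prompts.task_done()

    threading.Thread(target=run, daemon=True).start()
    return prompts
```

With Hugging Face `diffusers`, `render` could wrap a loaded `StableDiffusionPipeline`, for example `lambda prompt, path: pipe(prompt).images[0].save(path, format="PNG")`. The explicit format is needed because the temporary name ends in `.tmp`. The WebSocket handler would then call `put` on the returned queue for each decoded response.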

Experience Design

At the entrance to the exhibit room, visitors begin their experience by responding to a prompt on a tablet: “If AI can be anything in this world, what would AI look like?” Inside the room, two visualizations (text bubbles and AI-generated visuals) are projected onto two sides of the room. Visitors can walk around the room and immerse themselves in the visualizations. The equipment for this experience includes two projectors, a tablet to collect real-time responses, a laptop to run the TouchDesigner project, and a curtain that serves as the projection surface.
